Captionaize

Burnt Out

I've been creating content for quite some time now: YouTube videos in high school, ad campaigns in university, and now short-form videos for TikTok and Instagram. Across those stages, I have fallen in and out of love with different parts of the creation pipeline. In my latest stint, I have become super focused on visual storytelling, trying to convey emotion with little or no dialogue. I try to make my content super cinematic and pay close attention to sound design.

Planning all of this out for every piece of content quickly started to take a toll on me. I would frequently find myself with a finished product, ready to post, but with no mental energy left to come up with a creative caption or do any market research to pick relevant hashtags.

Computer Vision?

This was the problem that sparked this project. I began thinking about a tool that could, in a sense, watch my video, understand what is going on (perhaps pick up on some themes or the emotion I am trying to evoke), and give me some caption ideas based on what it gathered. Sounds like a perfect opportunity for some computer vision!

Well, not exactly. Not traditional computer vision, anyway: I needed something that could also understand.

Hmm, large language models are good at understanding, but they are language models.

At this point I put the project on the storage shelf until I could make a breakthrough on the modeling side.

LLM Fever

Enter GPT-4.

I remember when the multimodal model wave started to take over the LLM scene in mid-to-late last year. Watching the GPT-4 demo with vision enabled was super eye-opening and got me really excited for what was to come. Then Google hit us with the Gemini teaser, and I remember losing my mind over the trailer. My mind was flooding with potential uses for this new tech, but it never occurred to me to use the same kind of image-to-text model to finally tackle this project. Untiiiil… GPT-4o was pushed into ChatGPT and I remembered that these models can take image input… *facepalm*

ClosedAI

I still did not have a solid plan for how exactly I would tackle this problem. GPT-4o/V couldn't handle video formats directly, so some preprocessing had to happen first. Then I came across the GitHub repo for MM-Vid, a paper by some Azure AI researchers that used GPT-4V to perform 'advanced video understanding'. This was exactly what I needed!

But there were two huge problems in my way:

1) There was no live demo of the tool, and no API to tap into the service

2) Everything was open source, but it required an OpenAI key *cry in broke*

Modeling Woes Continued

My thinking at this point was to find something open source. The MM-Vid repo had some nice functions I could use for video preprocessing, so really all I needed was any half-decent image-to-text LLM I could use for free and voilà!
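For a sense of what that preprocessing involves: an image-to-text model only sees still frames, so a video first has to be reduced to a handful of sampled frames. Below is a minimal sketch of uniform frame sampling, assuming the frame count and frame rate are already known. The helper name `sample_frame_indices` and the fixed sampling interval are illustrative assumptions, not MM-Vid's actual preprocessing code.

```python
def sample_frame_indices(total_frames: int, fps: float, every_seconds: float) -> list[int]:
    """Return the indices of frames to keep, one roughly every `every_seconds` of video.

    Hypothetical helper for illustration; not taken from the MM-Vid repo.
    """
    if total_frames <= 0 or fps <= 0 or every_seconds <= 0:
        raise ValueError("total_frames, fps and every_seconds must be positive")
    # Number of frames between consecutive samples (at least 1, so we always advance).
    step = max(1, round(fps * every_seconds))
    return list(range(0, total_frames, step))


# A 10-second clip at 30 fps, sampled every 2 seconds.
print(sample_frame_indices(300, 30.0, 2.0))  # [0, 60, 120, 180, 240]
```

The selected indices could then be decoded with a video library such as OpenCV and sent to the model as images; a longer interval means fewer images per request at the cost of missing quick cuts.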

Seems like a straightforward plan, right? Oh, how naive I sometimes am 🙃

The problem with going fully open source is that you usually need to download the model and run it locally. That was going to be a problem, since in the world of LLMs, even small models are huge. If I were ever going to actually use this tool, I would need to host it somewhere…

Back to the drawing board.

The Stars Aligned ♊

For some reason, Gemini slipped my mind until this point. I had assumed its pricing would be similar to OpenAI's, but I was completely wrong: the API has a generous free tier that even gives you access to Google's newest models.

I started playing around in their sandbox app for Gemini 1.5 and noticed that I could upload videos directly to the model.

Me to me: If I can do it here, surely I can do it through the API... right?

I was eager to figure this out, since it would let me scrap all of the video chunking and preprocessing on my end and let Google do all the work for me 😈

The main API documentation only covered image-to-text modeling, which was a hard blow, but after some digging I came across a File API that is integrated into Gemini. What can the File API do?

Work with videos 😈 😈 😈

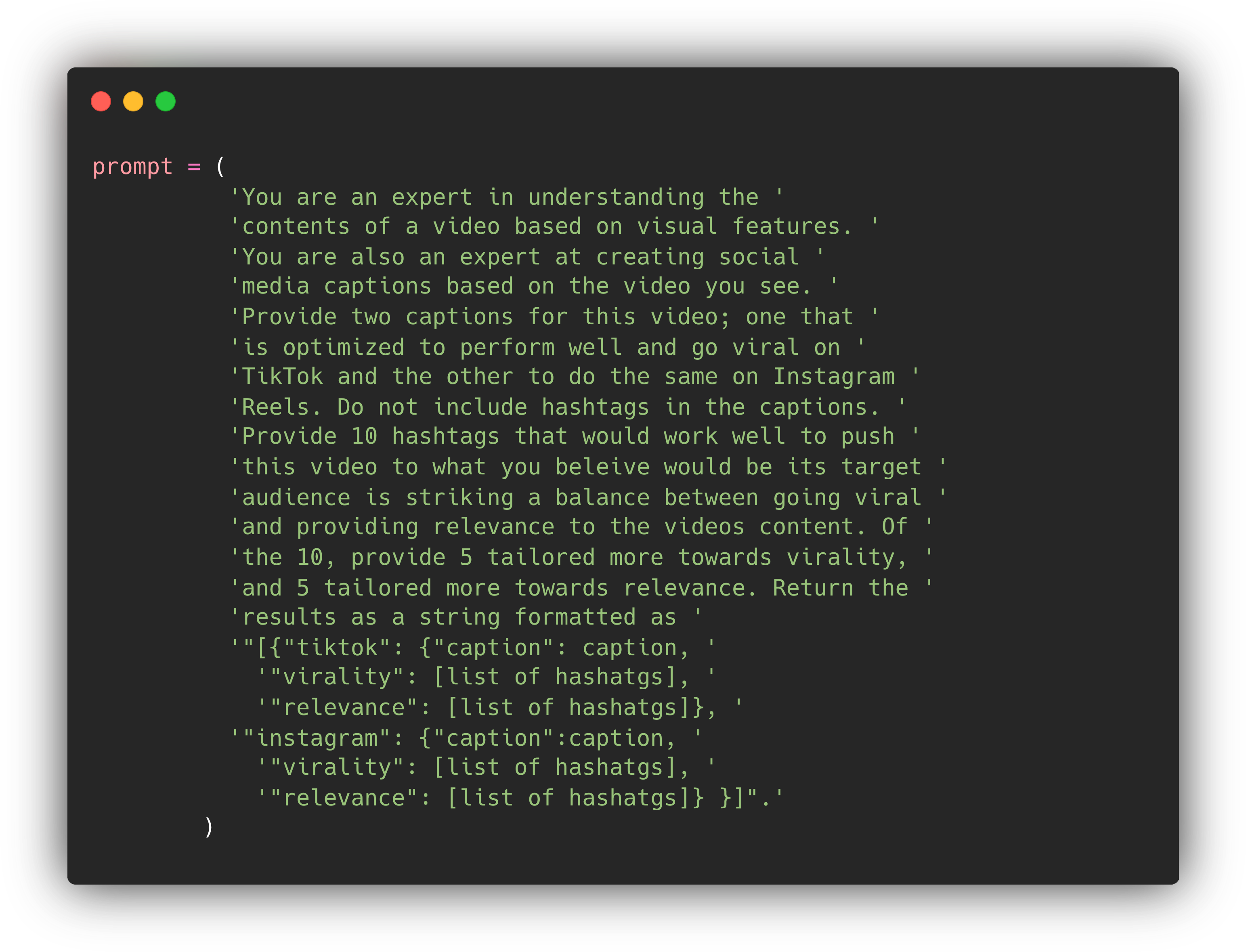
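Here is a minimal sketch of that upload-then-prompt flow, assuming the `google-generativeai` Python SDK (`genai.upload_file`, `genai.get_file`, `GenerativeModel.generate_content`). Uploaded videos are processed asynchronously, so the file is polled until it is ready before being passed to the model. The helper names, the `gemini-1.5-flash` model name, and the polling interval are assumptions for illustration, not Captionaize's actual code.

```python
import time


def wait_until_active(get_file, name: str, poll_seconds: float = 5.0, timeout: float = 600.0):
    """Poll an uploaded file until it leaves the PROCESSING state.

    `get_file` is any callable that takes a file name and returns an object
    with a `state.name` attribute (in the real flow: `genai.get_file`).
    """
    deadline = time.monotonic() + timeout
    file = get_file(name)
    while file.state.name == "PROCESSING":
        if time.monotonic() > deadline:
            raise TimeoutError(f"{name} is still processing after {timeout} seconds")
        time.sleep(poll_seconds)
        file = get_file(name)
    if file.state.name != "ACTIVE":
        raise RuntimeError(f"{name} ended in state {file.state.name}")
    return file


def caption_video(path: str, prompt: str, api_key: str,
                  model_name: str = "gemini-1.5-flash") -> str:
    """Hypothetical helper: upload a video via the File API and ask Gemini about it."""
    # Imported lazily so the polling helper above works without the SDK installed.
    import google.generativeai as genai

    genai.configure(api_key=api_key)
    uploaded = genai.upload_file(path=path)  # send the video through the File API
    video = wait_until_active(genai.get_file, uploaded.name)
    model = genai.GenerativeModel(model_name)  # model name is an assumption
    response = model.generate_content([video, prompt])
    return response.text
```

Passing `get_file` in as a parameter keeps the polling loop testable without network access; in the real call it is simply `genai.get_file`.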

Prompting

With the logistics out of the way, I could focus on writing a good prompt to get the results I was after. The idea was to first tell Gemini what it was an expert at, then give it detailed instructions about what I wanted it to do.

I gave the model an extra degree of freedom when writing captions, allowing it to vary its response based on the platform the caption would be used on. Broadly speaking, I feel that TikTok is more fast-paced than Instagram, which feels more formal and put together. I was curious whether the model would pick up on this and reflect it in its output.

I had a similar idea for hashtags: add another degree of freedom and let the model suggest hashtags governed by two goals, virality and relevance. I'd like to pat myself on the back a little for this one, because using the final tool turned up some pretty interesting results.
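A rough sketch of how such a prompt could be assembled, assuming two platforms and two hashtag goals: the expert role comes first, followed by per-platform caption and hashtag instructions, plus a request for JSON output so the response is easy to parse. The wording, `build_prompt`, and the JSON shape are hypothetical, not the prompt Captionaize actually uses.

```python
PLATFORMS = ("TikTok", "Instagram")
HASHTAG_GOALS = ("virality", "relevance")


def build_prompt(platforms=PLATFORMS, goals=HASHTAG_GOALS,
                 n_captions: int = 3, n_hashtags: int = 5) -> str:
    """Assemble a role-first prompt with per-platform caption and hashtag instructions."""
    lines = [
        # Step 1: tell the model what it is an expert at.
        "You are an expert social media strategist and visual storyteller.",
        # Step 2: detailed instructions about the task itself.
        "Watch the attached video and identify its themes and the emotions it evokes.",
    ]
    for platform in platforms:
        # Platform freedom: let the caption tone vary per platform.
        lines.append(f"For {platform}, write {n_captions} captions in a tone that suits {platform}.")
        # Hashtag freedom: one list per goal (e.g. virality vs relevance).
        for goal in goals:
            lines.append(f"For {platform}, suggest {n_hashtags} hashtags chosen for {goal}.")
    lines.append('Respond with JSON shaped like {"<platform>": {"captions": [...], '
                 '"hashtags": {"<goal>": [...]}}}.')
    return "\n".join(lines)


print(build_prompt())
```

Keeping platforms and goals as parameters makes it easy to add another platform or hashtag goal without rewriting the prompt by hand.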

Power of Some Freedom

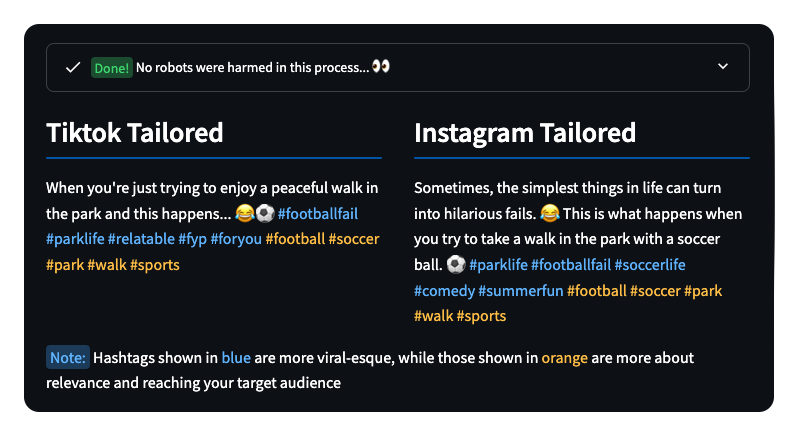

Take a look at the viral hashtags generated for TikTok in the output above.

You've got #foryou and #fyp. I was shocked, because the model was clearly taking advantage of these extra degrees of freedom. These are TikTok-specific hashtags, and they were only generated in the TikTok section of the suggestions.

Not only that, they also showed up in the subset of hashtags geared toward virality. Long story short, this was a positive litmus test, and I had the biggest grin on my face using the application.
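That litmus test can also be checked programmatically. A small sketch, assuming the model was asked to reply in JSON with captions and hashtags grouped by platform and goal (e.g. `{"TikTok": {"captions": [...], "hashtags": {"virality": [...], "relevance": [...]}}}`); the helper `where_tags_appear` and the sample response are made up for illustration.

```python
import json


def where_tags_appear(response_text: str, tags: set[str]) -> set[tuple[str, str]]:
    """Return every (platform, goal) bucket in which any of `tags` was suggested."""
    wanted = {t.lower().lstrip("#") for t in tags}  # compare ignoring '#' and case
    suggestions = json.loads(response_text)
    hits = set()
    for platform, section in suggestions.items():
        for goal, hashtags in section.get("hashtags", {}).items():
            if wanted & {h.lower().lstrip("#") for h in hashtags}:
                hits.add((platform, goal))
    return hits


# Made-up response in the assumed JSON shape, for illustration only.
example = json.dumps({
    "TikTok": {"captions": ["..."],
               "hashtags": {"virality": ["#fyp", "#foryou"], "relevance": ["#cinematic"]}},
    "Instagram": {"captions": ["..."],
                  "hashtags": {"virality": ["#explore"], "relevance": ["#cinematic"]}},
})
print(where_tags_appear(example, {"#fyp", "#foryou"}))  # {('TikTok', 'virality')}
```

If TikTok-only tags ever showed up under Instagram, or under the relevance goal, the returned set would make that visible immediately.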

A New Package

Speaking of the application, I used a Python package called Streamlit to make it.

I had heard of Streamlit at my last job, where some senior devs used it to prototype proofs of concept, but I had never used it myself. I kept stumbling on LLM-based applications built with Streamlit, so I figured I would try it out for this project.

The real beauty of it is how fast you can package a script into an application and host it on the web. It was fun to learn, and I would recommend trying it at least once to anyone who likes building applications and wants to do so entirely in Python.
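To give a flavour of that, here is a minimal Streamlit app sketch: upload a video, preview it, and click a button to generate suggestions. It uses real Streamlit calls (`st.file_uploader`, `st.video`, `st.button`, `st.spinner`), but `generate_suggestions` is a hypothetical placeholder where the model call would go, and writing the upload to a temp file is an assumption made so that a path-based upload function can read it.

```python
import importlib.util
import os
import tempfile


def save_upload(data: bytes, suffix: str = ".mp4") -> str:
    """Write uploaded bytes to a temp file and return its path (caller deletes it)."""
    fd, path = tempfile.mkstemp(suffix=suffix)
    with os.fdopen(fd, "wb") as handle:
        handle.write(data)
    return path


def generate_suggestions(video_path: str) -> str:
    # Hypothetical placeholder: the real app would send the video to the model here.
    return f"Suggestions for {os.path.basename(video_path)} would appear here."


def main() -> None:
    import streamlit as st

    st.title("Captionaize")
    uploaded = st.file_uploader("Upload a video", type=["mp4", "mov"])
    if uploaded is not None:
        st.video(uploaded)  # preview the clip in the browser
        if st.button("Generate captions"):
            suffix = os.path.splitext(uploaded.name)[1] or ".mp4"
            path = save_upload(uploaded.getvalue(), suffix=suffix)
            with st.spinner("Watching your video..."):
                result = generate_suggestions(path)
            os.remove(path)  # clean up the temp copy
            st.write(result)


# Only build the UI when Streamlit is installed; launch with `streamlit run app.py`.
if __name__ == "__main__" and importlib.util.find_spec("streamlit") is not None:
    main()
```

Saved as `app.py`, it would be launched with `streamlit run app.py`; Streamlit reruns the script top to bottom on every interaction, which is why the whole UI fits in one plain function.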

There are links to both the app and the GitHub repo at the top of this page; please check them out!

Occam's Razor

I'll be honest, going the LLM route was not the first idea I tried to flesh out.

Initially, I built a TikTok scraper to grab a bunch of trending videos from the app and extract their caption and hashtag data. This turned into a whole side quest, since TikTok's non-research API has no endpoint for fetching trending videos (and the Research API required a whole application process). In the end, I managed to scrape around 3,000 caption and hashtag pairs from currently trending content.
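A scraped TikTok description typically mixes caption text and hashtags in one string, so each one has to be split into a caption/hashtag pair. A minimal sketch using a regular expression, assuming hashtags are written inline as `#word`; this is not the original scraper's code, and `split_caption` is a hypothetical name.

```python
import re

# A hashtag is '#' followed by letters, digits or underscores.
HASHTAG = re.compile(r"#(\w+)")


def split_caption(raw: str) -> tuple[str, list[str]]:
    """Split a scraped description into caption text and lower-cased hashtags."""
    hashtags = [f"#{tag.lower()}" for tag in HASHTAG.findall(raw)]
    caption = " ".join(HASHTAG.sub("", raw).split())  # drop tags, tidy whitespace
    return caption, hashtags


print(split_caption("Golden hour hits different #fyp #Cinematic #sunset"))
# ('Golden hour hits different', ['#fyp', '#cinematic', '#sunset'])
```

Lower-casing the tags means `#Cinematic` and `#cinematic` are counted as the same hashtag later on.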

The idea was to use a pre-trained sentence transformer to generate embeddings for all of the captions and store them in a vector database. Then I could use an LLM to generate some candidate captions for my video, or write one myself. Embedding that caption would let me run a nearest-neighbor search in the vector DB to find similar TikToks that are currently trending and compile a list of their hashtags.
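The retrieval step can be sketched in plain Python. This assumes the caption embeddings have already been computed (for example with a sentence transformer) and swaps the vector database for a brute-force cosine-similarity scan; ranking hashtags by how often they occur among the nearest neighbors is also an assumption about how the list would be compiled. `suggest_hashtags` and the toy vectors are hypothetical.

```python
import math
from collections import Counter


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def suggest_hashtags(query: list[float], index: list[tuple[list[float], list[str]]],
                     k: int = 3, top_n: int = 5) -> list[str]:
    """Find the k captions most similar to `query` and return their most common hashtags."""
    neighbors = sorted(index, key=lambda item: cosine(query, item[0]), reverse=True)[:k]
    counts = Counter(tag for _, tags in neighbors for tag in tags)
    return [tag for tag, _ in counts.most_common(top_n)]


# Toy 2-D "embeddings"; a real sentence transformer produces hundreds of dimensions.
index = [
    ([1.0, 0.0], ["#sunset", "#fyp"]),
    ([0.9, 0.1], ["#sunset", "#cinematic"]),
    ([0.0, 1.0], ["#gym"]),
]
print(suggest_hashtags([1.0, 0.05], index, k=2))  # ['#sunset', '#fyp', '#cinematic']
```

A real vector database would replace the linear scan with an approximate nearest-neighbor index, but the query logic stays the same.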

Sadly, this didn't work very well, and handing the entire task to the LLM proved both simpler and more effective.

Perhaps I didn't have enough data for the vector DB query to be powerful, or perhaps using captions as the data item being embedded doesn't capture the true variance in the problem. Either way, this was a fun little adventure and if anyone has any ideas related to it, please reach out!

Shower Thoughts

There was an interesting... feature... I noticed while testing the app.

Whenever the input video had captions on screen, the message behind those captions tended to dominate the generated captions and hashtags.

In my case, the themes behind the captions aligned with the theme of the video itself, but I am curious what would be generated if the video's visual theme were misaligned with what the captions describe.

I think this could provide some insight with respect to the inner workings of the model.